No matter what technological area expertise, patching is always a requirement. This is no different in the life of a DBA. One of the tasks is to ensure that the environment is kept up to date with new releases and security updates but are you able to automate patching? The answer, yes but carefully.

There are a few questions that should be answered when approaching the subject of automated patching:

- Has a backup been taken?

- Is the environment ready for patching?

- Is the Databases ready for patching?

- Is the server a member of an AlwaysOn availability group? Can it be failed over safely?

- Is there a backout plan for if anything goes wrong?

- Can we send out an alter in the event of a failed backup?

When out team was approached with creating a method of deploying automated patching for SQL servers, we took the safe approach and made sure the automated process was aware of the environment that it was patching and would only patch if it met specific requirements. There are a few queue areas that this post will concentrate on to make sure that each patch installed is ether successful or an alert is sent out for a failure. What we would like to avoid is silent failures.

Logging

When a patch job is launched on a server, the first step in the process is to setup a patching log file. This step is critical, and the entire job will fail with an exit code if the process of log creation does not meet the following requirements:

- Does the Log Directory exist? If not attempt to create it and fail if it is not possible

- Does a Log exist? We can't overwrite old logs we need a method of re-naming old log files while only keeping a specific number for housekeeping. If no log exists create a new log

- Can I successfully write to the log file? If not then the job fails.

Once logging has been verified, we can continue with the patching process now that we know we have a central location shared between all steps to send output. From this point on, we can have a failure with the execution of certain steps based on the output but being able to log data is a critical step that should fail the entire patching process if not successful.

Investigation

The next phase of the patching process is to gather information about the current environment targeted and to check to make sure it meets patching requirements. Some of the information that is gathered to be used for further analysis later on in the patching process:

- The name(s) and number of installed Instances

- The SQL Port of the Engine Service

- The SQL Cluster name (if there is one)

- Is HADR enabled on the Instance

- Verify that the account used for patching has appropriate SQL permissions

- Verify that the account used for patching has appropriate OS permissions

- AlwaysOn Availability group information and roles

- AlwaysOn Availability group listener information

- Disk space

- Database health

- OS pending reboot status

Logic

Based on the information found during the Investigation phase now the fun part begins. If we setup our job with all of the information gathering contained within the investigation phase, you can then make a small sub job that can be run before the patching process that will pre-check the environment before the full automation patch job is launched. This way small or easy failures can be corrected before a full patching job is launched on the SQL Server.

The Logic phase will act on the information and run specific tasks to prepare the server for patching. The responsibility of a DBA is to maintain SQL server availability and data integrity and blindly patching a server can compromise both of those responsibilities. Some of the Logic used before patching are as follows:

- Reboot the server if has a pending reboot and pick up where it left off after a reboot

- Check Database status and correct as needed

- Launch a standardized backup job and launch a automated backup process if no standardized job is found

- If AlwaysOn exists, check for failover mode and data synchronization type, failover if possible so we are only patching on a Secondary.

- Verify AlwaysOn secondary status and patch level before patching primary if a failover is not possible

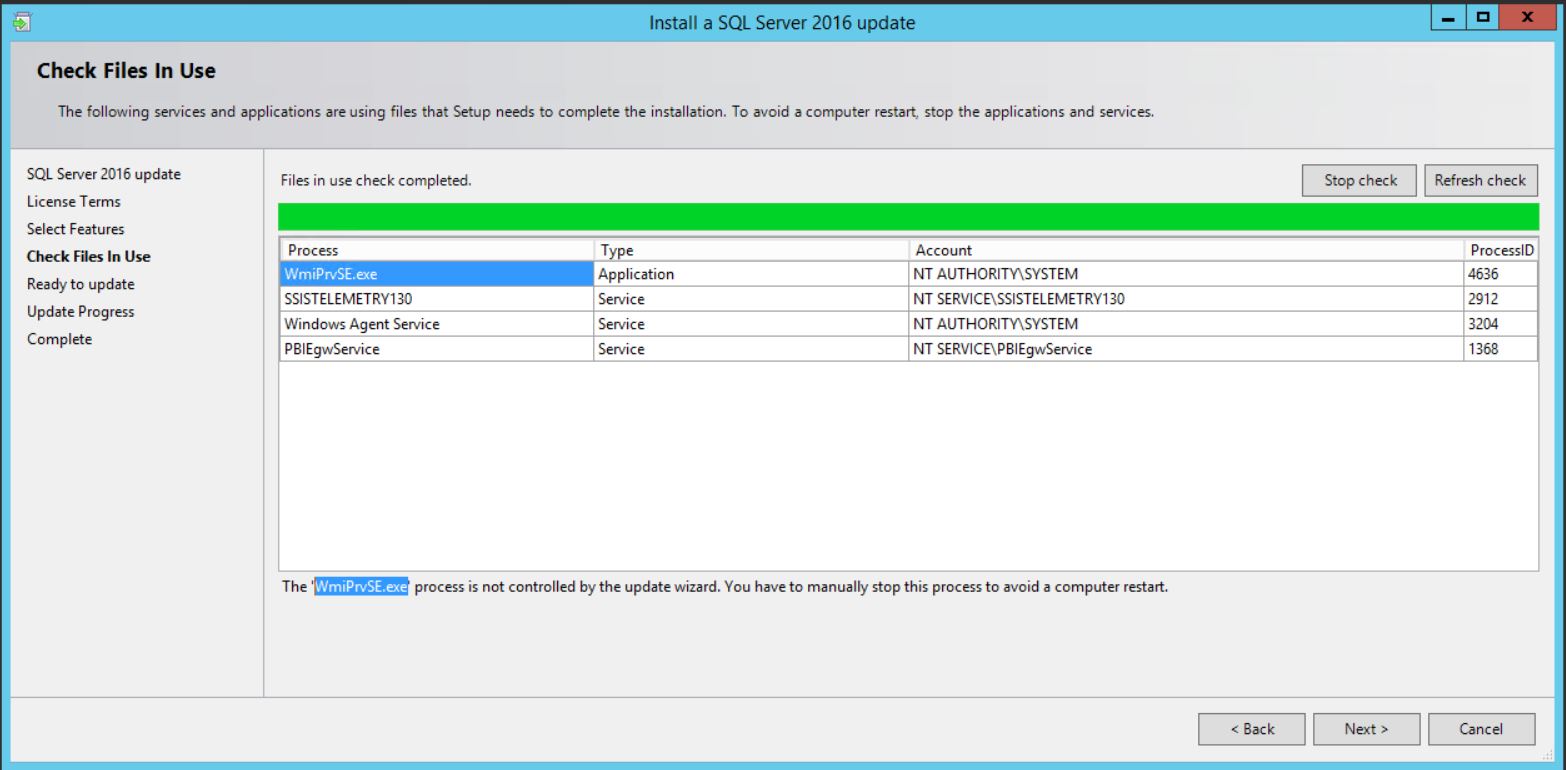

- Check for monitoring services and cleanly shut them down

Patching

When entering the patching phase, we are at the point of no return. Once monitoring has been disabled on the server, we can't have any of the process fail to run as we would be left with an un-monitored environment. In out process, turning off monitoring is the last step before patching. Enabling monitoring again is the first step after a patch has completed it's executing. At this point we are not verifying that patching is successful, we are just making sure we have a monitored environment and alerts are able to be sent out in the event of a failure. This phase is the watchdog patching sandwich.

Validations

After monitoring has started successfully, we move on the validation phase with tasks that read through the SQL patching log files for events and exit codes to verify that the patching process has completed successfully. If an error is discovered, an alert can be generated with the severity of the system being patched currently.

Conclusion

The dilemma of patching SQL server through an automated process while maintaining the requirements of a database admin for data integrity and availability, can be accomplish through the means of some logic and smart patching processes that verifies and corrects issues before the patching process has been launched.